Apache, the 500-pound gorilla

My notes and thoughts about a recent infrastructural change to the BlueOnyx YUM repositories.

The Apache webserver is the metaphorical 500-pound gorilla in the monkey cage. It can do pretty much anything you ask it for. But oh boy ... it's hardly ever easy. If you ask it for a banana, it asks which plantation you want it from, whether that banana ought to be ripe or green, what its curvature radius ought to be (if the banana is delivered to the European Union), whether you want it peeled, possibly sliced as well, served on ice, cooked, baked or raw. And that's typically just the start of it. Only after all of that will the gorilla turn around, pluck a banana and hand it to you.

There are other webserver alternatives that are, from the start, less bitchy. Take nginx, for example. Freshly installed in its default configuration it can't even serve Perl scripts or PHP, unless you tell it how to do that (and there are various alternatives to choose from). But it's a squirrelly, agile and fast little monkey. You ask it to grab you a banana and it either immediately shrugs apologetically or hurries off to get you one, no stupid questions asked.

I'm usually not an advocate of OS wars, nor particularly enthusiastic about this or that piece of software. Nor are all my suggestions for using this or that OS or piece of software solely based on my personal preferences and what I know best.

Rather, I'm an advocate of this motto: Use the tool that best suits your needs.

For the BlueOnyx YUM repositories we had used the Apache webserver from the start, because that is what we have on BlueOnyx, too. In fact, updates.blueonyx.it was running BlueOnyx 5106R and eventually moved to BlueOnyx 5107R. However, this top-level YUM repository has always exhibited the same faults and deficiencies that we had also seen with the BlueQuartz YUM repository. For us it happened less frequently, as we had been providing more than one YUM repository from the start. But after a certain number of weeks, Apache on that repository would simply run out of semaphores and stop serving requests.

Well ... of course these problems can be mitigated. Comment out all unneeded Apache modules, turn off what doesn't need to be turned on and restart Apache periodically with a cronjob. Or restart the whole bloody VPS frequently.

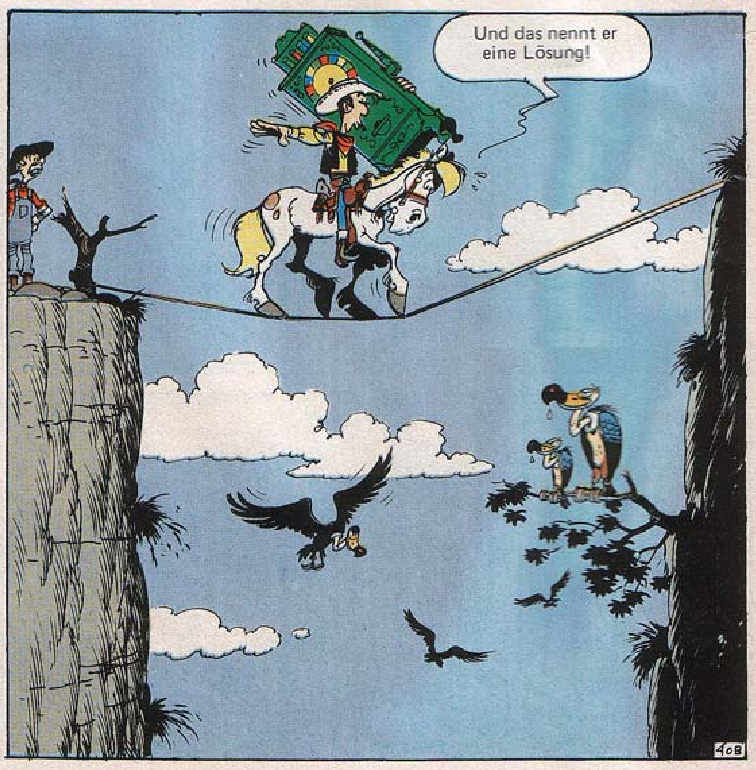

Well, this reminds me of a scene from a Lucky Luke comic that I had read in my childhood:

The caption (in German) reads: "And that's what he calls a solution!"

Sure, you can get Apache to do exactly what you want. Eventually. But is it worth it? Sometimes the answer to that can be: "Not really."

Our updates.blueonyx.it also had other issues. During one particular hour of the day, more than 1200 servers hit it, according to the statistics we keep. In reality, around 800-900 of these "hits" happen within the same small 2-5 minute window. Our hardware can handle that, and Apache mostly can as well. After all, there are only three PHP scripts on it: the main mirror.php script, which gets hit every time, and two small others, which are rarely used.

The rest of the "stuff" that gets requested consists of the repositories' XML files and, of course, the most recent RPMs. However, that busiest hour of the day usually fell into my sleep cycle, and if Apache decided to take a dive, it was usually then and there.
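To put that burst into numbers, here is a quick back-of-the-envelope calculation using only the figures above (1200 check-ins per hour, 800-900 of them within 2-5 minutes). It deliberately ignores that each check-in triggers several HTTP requests (mirror.php, the XML files, possibly RPMs), so the real request rate is a multiple of these values.

```python
def arrival_rate(count: int, window_seconds: float) -> float:
    """Average number of server check-ins per second over a time window."""
    return count / window_seconds


hourly = arrival_rate(1200, 60 * 60)    # 1200 servers spread over a full hour
burst_low = arrival_rate(800, 5 * 60)   # 800 servers within 5 minutes
burst_high = arrival_rate(900, 2 * 60)  # 900 servers within 2 minutes

print(f"{hourly:.2f} {burst_low:.2f} {burst_high:.2f}")  # prints "0.33 2.67 7.50"
```

So during the burst, servers arrive roughly 8 to 22.5 times faster than the hourly average would suggest.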

Now, we don't exactly need the full functionality that our usual Apache setup provides. So I looked across the table of things we usually use and ogled nginx.

I set up a new SL-6.3 VPS (minimal install), dropped in nginx, Varnish Cache, PHP-FPM and some dependencies. The configuration was a breeze. I set up nginx to listen on 127.0.0.1:8080 and put Varnish Cache on the public IP and port 80. PHP-FPM is used to serve the three PHP scripts via nginx.

Then I cloned that VPS. On the clone, Varnish Cache got disabled and nginx moved to the public IP and port 80. Next I went back to the Varnish Cache config on the first VPS and set it up so that Varnish periodically polls a PHP script on the two nginx servers. The script responds normally if nginx works and the connection to the MySQL database backend (for statistics and available repositories) checks out; otherwise it returns a 500 server error. If the script checks out without error, Varnish considers that nginx backend healthy and will use it to fetch data that is not in the cache. This adds a bit of redundancy, although there are still plenty of potential single points of failure.
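The health-check arrangement can be modelled in a few lines. This is a minimal sketch, not the actual Varnish configuration or PHP script used here: `health_status` stands in for the health script's decision (a hypothetical helper), and `BackendProbe` mimics the rolling window that Varnish probes use, where a backend counts as healthy when at least `threshold` of the last `window` polls succeeded. The window and threshold values are assumptions, and this model starts with an empty history, so a backend is considered sick until enough good polls have come in.

```python
from collections import deque
from typing import Dict, Optional


def health_status(nginx_ok: bool, db_ok: bool) -> int:
    """Hypothetical health-script logic: 200 if nginx and the MySQL check are fine, else 500."""
    return 200 if (nginx_ok and db_ok) else 500


class BackendProbe:
    """Rolling-window health model, loosely following Varnish's .window/.threshold semantics."""

    def __init__(self, window: int = 5, threshold: int = 3) -> None:
        self.threshold = threshold
        # Only the most recent `window` poll results are kept.
        self.results: deque = deque(maxlen=window)

    def record(self, status_code: int) -> None:
        # A poll counts as good only if the health script answered 200.
        self.results.append(status_code == 200)

    @property
    def healthy(self) -> bool:
        return sum(self.results) >= self.threshold


def pick_backend(probes: Dict[str, BackendProbe]) -> Optional[str]:
    """Return the first healthy backend name (a simplification of a Varnish director)."""
    for name, probe in probes.items():
        if probe.healthy:
            return name
    return None
```

With these defaults a backend needs three good polls before it is used, and three consecutive 500 responses (for example from a failing MySQL check) are enough to take it out of rotation again.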

Then I turned on caching itself. The RPMs and the XML files now get cached; the PHP scripts are excluded from caching.
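The caching rule boils down to a per-request decision. Here is a minimal sketch of that decision in Python (not VCL), assuming that repository metadata ends in `.xml` or `.xml.gz` (typical YUM `repodata` files) and that anything not explicitly listed is passed to the backend uncached. The suffix list and the default are assumptions, not taken from the actual configuration.

```python
from urllib.parse import urlsplit

# Assumed suffixes for cacheable objects: RPM packages and YUM repodata XML files.
CACHEABLE_SUFFIXES = (".rpm", ".xml", ".xml.gz")


def cache_decision(url: str) -> str:
    """Return "cache" for RPMs/XML metadata and "pass" for PHP scripts and everything else."""
    path = urlsplit(url).path.lower()  # the query string is not part of the path
    if path.endswith(".php"):
        return "pass"  # mirror.php and friends always go to nginx/PHP-FPM
    if path.endswith(CACHEABLE_SUFFIXES):
        return "cache"
    return "pass"  # assumed default: unknown content is not cached
```

Keeping mirror.php uncached also matters for the statistics, since the Piwik calls are made from within that script.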

The net result was kinda dramatic. Take that 2-5 minute window when 1200 servers hit updates.blueonyx.it at around the same time. They all call the mirror.php script, which is uncached, and nginx handles that just fine. Then about 800-900 servers access the XML files, which are all served out of the cache. At best, nginx gets accessed just once per file for that purpose; all other requests are served out of the cache.

Now, if there are updated RPMs that the servers want, Varnish might make *one* call to one of the two nginx backend servers and then serve the rest of those requests out of the cache, too.
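Why hundreds of requests for the same file can translate into a single backend fetch is easier to see in code. Varnish does something similar internally: requests for an object that is already being fetched are parked and then served once that fetch completes (often called request coalescing). The following is a minimal, thread-based sketch of the idea, not Varnish's implementation. The first caller for a key becomes the "leader" and fetches from the backend; everyone else waits for it and then reads the cached copy. There is no expiry, which a real cache obviously needs.

```python
import threading
from typing import Callable, Dict


class CoalescingCache:
    """Cache in which concurrent misses for the same key share a single backend fetch."""

    def __init__(self, fetch: Callable[[str], bytes]) -> None:
        self._fetch = fetch                               # backend call, e.g. HTTP GET to nginx
        self._store: Dict[str, bytes] = {}                # cached objects (no expiry here)
        self._inflight: Dict[str, threading.Event] = {}   # keys currently being fetched
        self._lock = threading.Lock()

    def get(self, key: str) -> bytes:
        while True:
            with self._lock:
                if key in self._store:
                    return self._store[key]               # cache hit
                event = self._inflight.get(key)
                leader = event is None
                if leader:
                    # First miss for this key: this caller fetches from the backend.
                    event = threading.Event()
                    self._inflight[key] = event
            if leader:
                try:
                    value = self._fetch(key)
                    with self._lock:
                        self._store[key] = value
                    return value
                finally:
                    # Wake up waiting callers whether the fetch succeeded or failed.
                    with self._lock:
                        del self._inflight[key]
                    event.set()
            # Another caller is already fetching: wait, then re-check the store.
            # If the leader's fetch failed, the loop lets one waiter retry.
            event.wait()
```

With 800-900 servers asking for the same XML file (say `repomd.xml`) within a few minutes, only the first miss reaches nginx; every later request is either parked behind that fetch or is a plain cache hit.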

Server load during those times? Hovering at an idle 0.20 or thereabouts.

All in all it's pretty impressive. Now THAT's what I call a solution! ;-)

Speaking of YUM repository statistics:

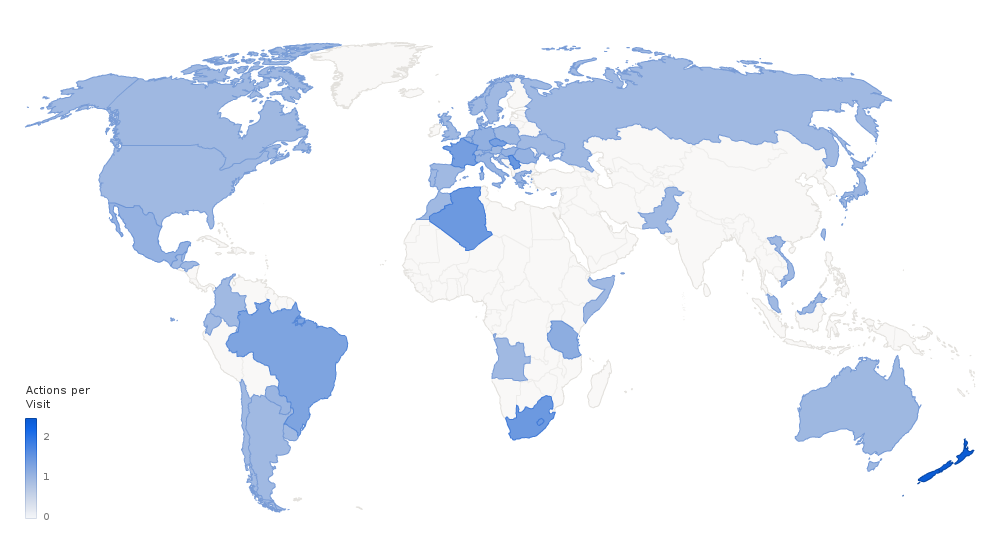

If you ever wondered in which countries BlueOnyx is used, the above map might give you some ideas. Note that the intensity of the colouring doesn't indicate how many or how few BlueOnyx servers are in these countries. The real "per country" distribution looks a bit more like this:

That's just the top of the list; it goes on for a bit more. And no, the "visits" column doesn't represent the number of servers per country. It's just the number of visits from unique IPs to updates.blueonyx.it via YUM in a certain time frame. This statistic doesn't log other accesses to updates.blueonyx.it. The whole Piwik integration there is simply done via Piwik API calls from within the main mirror.php script.
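For the curious: server-side tracking like this can be done through Piwik's HTTP Tracking API (`piwik.php`). The sketch below only builds such a tracking URL and is not the code in mirror.php; the endpoint host, site ID, action name and token are placeholders. The `cip` parameter, which attributes the visit to the YUM client's IP rather than to the web server making the call, requires a valid `token_auth`.

```python
from urllib.parse import urlencode

# Placeholder endpoint; the real Piwik host is not given in this post.
PIWIK_ENDPOINT = "https://piwik.example.com/piwik.php"


def piwik_tracking_url(client_ip: str, requested_url: str,
                       site_id: int = 1, token_auth: str = "YOUR_TOKEN") -> str:
    """Build a Piwik HTTP Tracking API request for one YUM check-in (placeholder values)."""
    params = {
        "idsite": site_id,            # Piwik site ID (placeholder)
        "rec": 1,                     # required: actually record the request
        "url": requested_url,         # the URL the YUM client requested
        "action_name": "mirror.php",  # label shown in the Piwik reports (placeholder)
        "cip": client_ip,             # visitor IP override; needs token_auth
        "token_auth": token_auth,
    }
    return PIWIK_ENDPOINT + "?" + urlencode(params)


print(piwik_tracking_url("203.0.113.7", "http://updates.blueonyx.it/mirror.php"))
```

The resulting URL is then requested with a plain HTTP GET from the script handling the YUM client's request.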
